It was well over a year ago that I wrote about being worried about Tesla FSD. I have not updated that article, because it continues to ring very true. Some people like to keep saying the same thing over and over again, but not me.

But I do think I see a new twist in the Tesla FSD landscape. Here is what I see:

- Tesla is very far from delivering a fully autonomous car. (Not new)

- [NEW!] People are conflating the Tesla FSD feature with a fully autonomous car.

- Elon is happy to let people live in this confusion and even fuels it with his tweets. (Not new)

Let’s look at each of these briefly.

Tesla is very far from delivering a fully autonomous car.

We will need some definitions to get anywhere in this discussion. For an autonomous car, we could try to use the SAE Levels of Driving Automation (Levels 0 through 5), but please see my previous post about the Levels of Automation being stupid. Instead, I will use my own "autonomous categories" of Computer Assisted and Computer Managed, which are based on who is responsible for the actions of the vehicle. In Computer Assisted, the human driver always has direct responsibility for the vehicle. Examples include Tesla FSD, most advanced cruise control systems, and Comma.AI devices. In Computer Managed, the corporate entity supplying the vehicle has that responsibility. Examples include Waymo and Cruise.

So a car is Computer Assisted when the human driver is ultimately responsible, regardless of how much assistance the computer is giving. And, of course, it is Computer Managed when the automation provider has the responsibility. This raises an interesting question about how one specific vehicle could transition between the two categories, but please see my other article for some discussion of that topic.

What does Tesla Full Self Driving mean?

Tesla and Elon have been very ambiguous about self-driving cars. I am a Tesla fan, but the lack of detail with regard to autonomous driving is mind-blowing to me. So I try to make sense of it. Elon must know that a totally autonomous Tesla vehicle with zero driving constraints will not be possible for a long time. So the real question is, "What will be the constraints under which a Tesla vehicle is allowed to drive autonomously?"

There is also the question, "Will a Tesla vehicle driving under the full responsibility of Tesla, as opposed to the responsibility of the human driver (see Computer Managed vs. Computer Assisted above), be able to transfer driving responsibility to and from the human driver?" And if so, under what circumstances? With the current Tesla FSD, it has been implied that the computer will eventually advance to the point where it can take full control and responsibility, but Tesla has never actually said this.

The only way that Tesla's and Elon's lack of specificity around autonomous driving makes sense is if Elon (and others?) have decided that this is the best business practice at this point in time. This, of course, feeds both the Tesla fans who feel that something magical is coming and the critics who say that Elon is lying.

I now think it is time for Tesla fans to see reality. Don’t let yourself be fooled.

What is Tesla FSD?

This is not difficult to find out. Just look at their website (shortened below). Tesla Full Self-Driving includes:

- Autopilot (on all Tesla cars)
  - Traffic-Aware Cruise Control: Matches speed to traffic.
  - Autosteer: Assists in steering within clearly marked lanes.
- Enhanced Autopilot (available separately from FSD)
  - Navigate on Autopilot: Interstate lane changes, interchanges, turn signals, and exits.
  - Auto Lane Change: Assists in moving to an adjacent lane on the highway.
  - Autopark: Helps with parallel or perpendicular parking.
  - Summon: Moves your car in and out of a tight space using the mobile app or key.
  - Smart Summon: Navigates more complex environments like parking lots.
- Full Self-Driving adds
  - (Beta) Traffic and Stop Sign Control: Identifies and responds to stop signs and red lights.
  - (Beta) Autosteer on city streets.

Tesla’s fine print for FSD

Below the list of Autopilot, Enhanced Autopilot, and Full Self-Driving features, there is the following statement:

“The currently enabled Autopilot, Enhanced Autopilot and Full Self-Driving features require active driver supervision and do not make the vehicle autonomous. Full autonomy will be dependent on achieving reliability far in excess of human drivers as demonstrated by billions of miles of experience, as well as regulatory approval, which may take longer in some jurisdictions. As Tesla’s Autopilot, Enhanced Autopilot and Full Self-Driving capabilities evolve, your car will be continuously upgraded through over-the-air software updates.”

This statement plainly says that these current (and beta) features "do not make the vehicle autonomous." It also teases the term "full autonomy" with no definition and no information on the path or timing that will get the car there. It is all left up to the reader's imagination.

I have recently heard many of the most respected Tesla supporters start to echo Elon's statements about "achieving Full Self Driving," and I am so disappointed! This includes Rob Maurer (of the Tesla Daily YouTube channel) and Dave Lee, also of YouTube and podcasts. And it is not limited to them: many financial analysts also use this term, and I would have expected them to know better.

We must stop this nonsense!

The term “Full Self Driving” has no good definition. It is used as if it means something like a Computer Managed (see my definition) vehicle, but Tesla has NOT MADE THIS STIPULATION. In fact, they are encouraging us to fool ourselves. In writing, they clearly state that the current and beta features “do not make the vehicle autonomous.” These cars are currently only Computer Assisted (see my definition) vehicles without any indication of how they will go beyond this!

The Difficulty of Autonomous Driving

I’ll end with some situations to try to highlight why I think that autonomous driving is so hard.

Difficult Situations

- There are lots of standard driving situations that are difficult. Just think of when you are in a new town and driving on unfamiliar roads.

- The current camera placement does not allow for perfect vision in all circumstances. As every driver knows, sometimes you just have to edge forward to see around something. The current cameras are not as flexible as your eyes.

- Mud or debris covers up a camera. Our eyes have nerves and a built-in washing station.

- The vehicle comes to a temporary stop sign at a construction area. When does the sign have its normal meaning ("Come to a complete stop and then continue") versus the meaning often found at construction sites ("Stop and don't go until you see the Slow sign or are told you can go")?

- A police officer stops a car because of a crime scene and asks the car to detour down an alley.

- Versus: An advertiser in an official-looking uniform directing cars into a store.

- What if a road in the desired direction is partially flooded? How does the car know if it is safe to proceed?

- The car starts making some odd sounds. At what point is there something that requires attention?

The real issue, though, is not the situations that you can list. It is that totally random things can and will happen out there. Humans are able to reason about their environment; computers, not so much.

The Best Indicator of Tesla’s Current Progress toward an Autonomous Vehicle

First of all, I hope that Tesla has some really good internal data on their progress toward an autonomous vehicle. It is not surprising that they do not share this data. Also, I will add that as a rideshare (Uber and Lyft) driver myself, I have offered to help them with their ADAS system, but they have not responded to me yet. I plan to keep trying.

In computer systems, there is a term called the "Happy Path." The Happy Path is when you interact with a system in the most expected way. No surprises. Programming is hard, and getting the Happy Path to work is often the first step, but it says little about whether the system works overall. Traversing the Happy Path really only shows that the system can succeed in the easiest case.

In the last few years, videos started to appear of Tesla FSD going from Point A to Point B without any interventions. A success. But I think people read too much into these random "Happy Path" successes. I say random because, in the case of FSD, these scenarios will never be repeated with exactly the same inputs, AND on a subsequent try the system might fail.

Okay, getting through the Happy Path is not really achieving much, but I guarantee that if you cannot get through some random Happy Paths you cannot even start measuring overall success. So, it seems that Tesla may have recently gotten to a point where it is possible to start to measure its progress.
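To see why one clean drive says so little, here is a minimal sketch. It assumes, purely for illustration, that every drive succeeds independently with some fixed probability `p_success` (real drives are not independent, and the values of `p_success` below are hypothetical). Under that assumption, the chance that any single drive is clean is just `p_success`, and the chance that at least one of several recorded attempts is clean, which is the one that ends up in a video, is `1 - (1 - p_success) ** attempts`.

```python
def chance_of_clean_drive(p_success: float, attempts: int) -> float:
    """Probability that at least one of `attempts` drives has no interventions,
    assuming each drive independently succeeds with probability `p_success`.

    Both the independence assumption and the p_success values are hypothetical.
    """
    return 1.0 - (1.0 - p_success) ** attempts


if __name__ == "__main__":
    # Hypothetical systems that succeed on only half, or only a fifth, of drives.
    for p in (0.5, 0.2):
        for k in (1, 5, 10):
            print(f"p={p:.1f}, attempts={k:2d}: "
                  f"{chance_of_clean_drive(p, k):.1%} chance of a clean video")
```

Under these assumptions, a system that fails half of the time still produces at least one clean drive out of ten attempts about 99.9% of the time, which is why a clean video is a starting point rather than a measurement.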

Letting a Tesla FSD vehicle try to act as a rideshare driver (Uber and Lyft) is a great way to gauge its ability as an autonomous vehicle. As a rideshare driver myself, I know there are some special situations that might require changes to how riders and the taxi interact. For example, a rider calls me to say they need to be picked up across the street. Or a rider with a foldable wheelchair needs it placed in the trunk. But we will assume that these will be handled in some appropriate manner. So let's look only at the A-to-B driving part of rideshare driving.

Most of us have seen YouTube videos demonstrating FSD. Without a doubt, the system is pretty incredible. And it is clear that it has improved. But, is it improving at a rate that will make it good enough to act as a fully autonomous RoboTaxi?

Is a success rate of 9/10 good enough?

What success rate for RoboTaxi drives do you think is necessary for a well-functioning system? Would you be happy if 9 out of 10 of your Uber rides were successful (90%)? Probably not. How good does the system have to be? Is 99 out of 100 good enough? 999 out of 1000? And we will also need to think not just about the failure rate, but also about the failure mode. Ending in a crash is much worse than having to walk an extra block to get to your destination, or needing to call another Uber.
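A quick way to feel the difference between these rates is to count failures. The minimal sketch below assumes independent rides and a hypothetical rider who takes 10 rides a month; it also treats every failure the same, so it deliberately ignores the failure-mode question raised above.

```python
def expected_failures(success_rate: float, rides: int) -> float:
    """Expected number of failed rides out of `rides`."""
    return (1.0 - success_rate) * rides


def chance_of_any_failure(success_rate: float, rides: int) -> float:
    """Probability that at least one of `rides` independent rides fails."""
    return 1.0 - success_rate ** rides


if __name__ == "__main__":
    # A 10-ride month is a hypothetical rider, not data from this article.
    for rate in (0.9, 0.99, 0.999):
        print(f"{rate:.1%} success: "
              f"{expected_failures(rate, 1000):.0f} failures per 1000 rides, "
              f"{chance_of_any_failure(rate, 10):.1%} chance a 10-ride month has a failure")
```

At 90%, that hypothetical rider would hit at least one failed ride in roughly two out of three months; at 99.9%, in roughly one month out of a hundred.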

As I mentioned, I'm a rideshare driver and have been driving for about 5 years. I have done almost 10,000 rides. I can only recall twice not being able to complete a ride. Both times it was because of a flat tire. I'm very glad to say I have had no accidents, but one time I did bottom out in a large hole near the curb, which might have been bad if it had been a few inches deeper. I have had to cancel at least one ride where the rider wanted to go farther than the range of my EV would allow, but that was done before the ride started.

A 99.9% success rate.

With all this in mind, I suggest that RoboTaxis need to fail on fewer than 1 in 1000 rides before they can even be considered viable enough to get started. And that failure had better be a "soft" fail, where the rider can recover even if the car cannot go on.
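Two quick calculations make this threshold concrete. The first turns my own record (about 2 incomplete rides out of about 10,000, both approximate) into a success rate. The second uses the standard zero-failure bound: if a system completes n rides in a row with no failures, you can say with 95% confidence that its failure rate is below q once (1 - q)^n ≤ 0.05, which is where the familiar "rule of three" (n ≈ 3/q) comes from. This is a sketch under my own assumption that rides are independent; the function names are just for illustration.

```python
import math


def success_rate(completed: int, total: int) -> float:
    """Fraction of rides completed."""
    return completed / total


def rides_needed(max_failure_rate: float, confidence: float = 0.95) -> int:
    """Failure-free rides needed before we can say, at the given confidence,
    that the true failure rate is below `max_failure_rate`.

    Solves (1 - q) ** n <= 1 - confidence for the smallest whole n,
    assuming rides are independent.
    """
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - max_failure_rate))


if __name__ == "__main__":
    # Approximate figures from my own driving: about 2 incomplete rides in 10,000.
    print(f"My record: {success_rate(9_998, 10_000):.2%}")
    # Consecutive clean rides a RoboTaxi would need to show fewer than 1 failure in 1000.
    print(f"Clean rides needed for < 1 in 1000: {rides_needed(0.001)}")
```

In other words, a RoboTaxi would need roughly 3,000 clean rides in a row just to show, with reasonable confidence, that it clears the 1-in-1000 bar.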

Real World Data: Where are we with FSD?

There is a YouTube channel, CYBRLFT, that has been carrying out this rideshare experiment for almost two years! It is hard for me to believe that he only has about 5,000 subscribers. If you are interested in this topic, you absolutely should follow him and check out some of his RoboTaxi Reports. I'll link to the latest below.

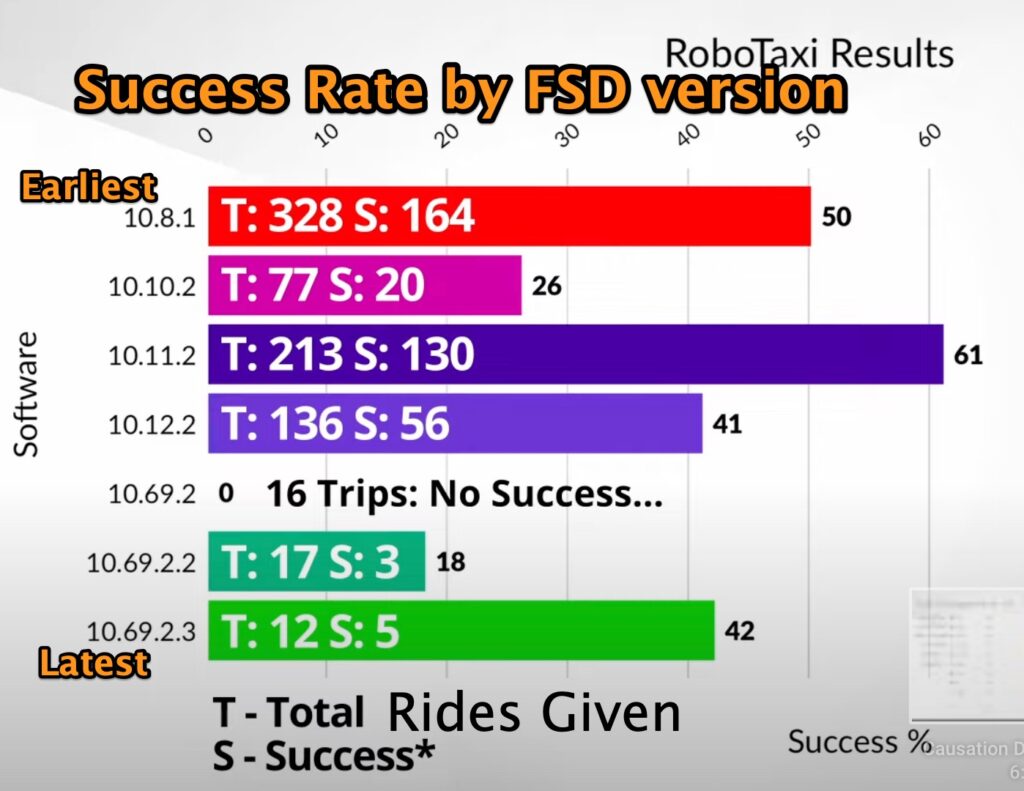

Here is a summary of CYBRLFT's success rate in taking a random Uber/Lyft rider from their pickup to their destination. A ride only counts as a success if the trip was completed without any help from the driver. This data was meticulously captured over the past 2 years and grouped by FSD software version.

The bars on the graph show the percentage of successful rides for each version of the FSD software. You will notice that he did far fewer rides on the more recent versions, and there is no control over which rides he was taking. He mentioned that, because of his job situation, he was driving less. This may also mean that he drove at different times or in different places. It could be that the more recent rides were more challenging.
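Because the newer versions have far fewer rides, their bars carry much more uncertainty than the older ones. One standard way to show this is a Wilson score interval for a proportion. The sketch below is my own illustration, not CYBRLFT's method, and the ride counts in it are made up, since the real per-version counts are only in the chart.

```python
import math


def wilson_interval(successes: int, rides: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a success rate; z=1.96 gives roughly 95% confidence."""
    if rides <= 0:
        raise ValueError("need at least one ride")
    p_hat = successes / rides
    denom = 1.0 + z * z / rides
    center = (p_hat + z * z / (2 * rides)) / denom
    half_width = (z / denom) * math.sqrt(
        p_hat * (1 - p_hat) / rides + z * z / (4 * rides * rides)
    )
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return max(0.0, center - half_width), min(1.0, center + half_width)


if __name__ == "__main__":
    # Hypothetical counts: the same 50% observed rate from a larger and a smaller sample.
    for successes, rides in ((50, 100), (5, 10)):
        low, high = wilson_interval(successes, rides)
        print(f"{successes}/{rides}: plausible true rate {low:.0%} to {high:.0%}")
```

The same observed 50% spans roughly 40% to 60% with 100 rides, but roughly 24% to 76% with only 10 rides, so a small-sample bar can move a lot without the software changing at all.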

Is FSD getting better?

So, just looking at this data, there is no indication that FSD is getting better. I think this is really because FSD is just now getting good enough to succeed at all. So, to really understand that FSD is actually getting better, you need to watch some FSD videos, because clearly it is much improved and can handle some much more complex scenarios.

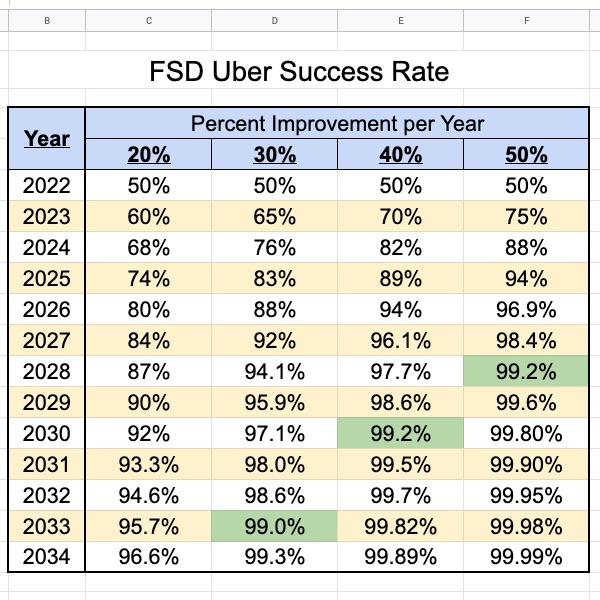

But, how does it get to the 99.9% (or whatever) success rate we would like to see?

Let's say that FSD is currently at a 50% success rate. If it improves by 20% every year (that is, its failure rate shrinks by 20% each year), how long will it take to get to an even more modest 99% success rate? Let's do the math. It turns out that will take well over 10 years! Arg!

Even if it could start improving by 50% per year, it would still take 6 years to reach that modest 99% success rate. Yikes!
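Here is that arithmetic as a short sketch. It assumes that "improving by X% per year" means the failure rate shrinks by X% each year, counted in whole years; other ways of modeling improvement would give different answers.

```python
def years_to_target(start_success: float, target_success: float,
                    yearly_reduction: float) -> int:
    """Whole years until the success rate reaches `target_success`, if the
    failure rate shrinks by `yearly_reduction` (e.g. 0.2 means 20%) every year."""
    if not 0.0 < yearly_reduction < 1.0:
        raise ValueError("yearly_reduction must be between 0 and 1")
    failure = 1.0 - start_success
    target_failure = 1.0 - target_success
    years = 0
    while failure > target_failure:
        failure *= 1.0 - yearly_reduction  # one year of improvement
        years += 1
    return years


if __name__ == "__main__":
    for reduction in (0.2, 0.5):
        for target in (0.99, 0.999):
            years = years_to_target(0.5, target, reduction)
            print(f"{reduction:.0%} per year, 50% -> {target:.1%}: {years} years")
```

Starting from 50%, a 20% yearly improvement takes 18 years to reach 99% (and 28 years to reach 99.9%), while a 50% yearly improvement takes 6 and 9 years respectively.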

So, I think this highlights the fact that 1) unrestricted autonomous driving is difficult, and 2) something substantial must change.

My Predictions

I think it is really hard to know how this whole autonomous vehicle thing is going to turn out. It seems Waymo and Cruise are struggling even in their heavily sandboxed environments. Although I see incredible drives with Tesla “in the wild” and clear improvement, I do not believe a successful go-anywhere RoboTaxi is anywhere in the near future.

It will be a slow go. I do think that Tesla will be able to release FSD out of beta within a year or so, but it will continue to be just a Computer Assisted vehicle. I expect they will release a specific RoboTaxi vehicle as a Computer Managed vehicle and start to compete with Waymo and Cruise. But, I do not see either of the vehicles being able to swing from one category of autonomy to the other.

What do you think?