Lately, I’ve been thinking a lot about Tesla’s sideview cameras and how good they are for FSD (Full Self Driving, City Streets). You can see my previous post about a possible location improvement, but that was just kinda for fun. This analysis is for real, and seems to point to a serious limitation.

Can the sideview cameras see far enough to allow a safe FSD (car-directed) right-hand turn onto a highway from a stop?

I’m not sure they can. Here is my analysis.

Note: Many of the images for this post are taken from Chuck Cook’s excellent YouTube video “Tesla Experiment on Camera Angles with FSDBeta using Drone View – FSDBeta 9.1 – 2021.4.18.13“. If you don’t subscribe to him, you are missing much of the best FSD content out there.

Scenario

You are at a stop sign and turning right onto a highway.

If the Tesla (turning right) is driving under FSD, can it see far enough up the road to make this turn safely?

Normally, when I make a turn like this, I want to complete it without interfering with the oncoming traffic.

Analysis

For this analysis, I will assume that traffic is moving at 60 MPH (~100 kph), which is common in the US on open access roads with the typical 55 MPH speed limit.

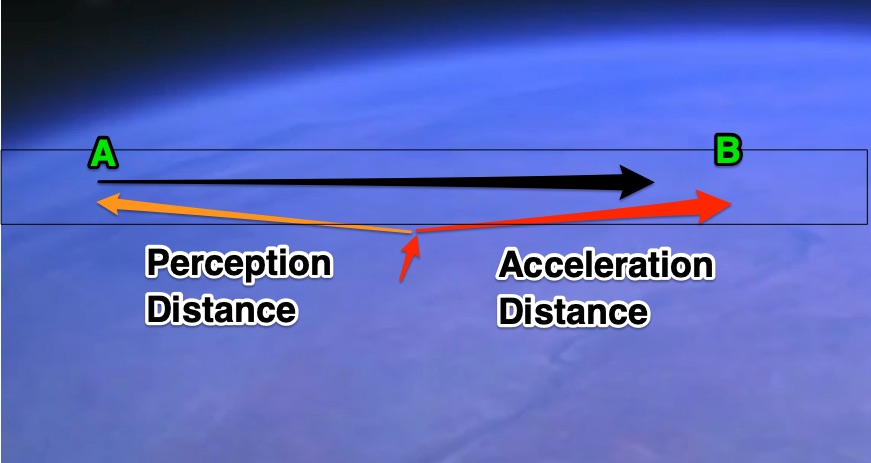

As depicted in this image, the Tesla, turning right and entering the road (red arrows), will accelerate to the same speed as the traffic. The question then becomes: what “Perception Distance” (orange) is required so that the cars do not collide at some point B?

Point B is defined as the point at which the Tesla reaches the highway speed of 60 MPH; from then on it is moving with traffic, and the approaching car will not hit it. If we leave no safety spacing and assume constant acceleration, it is fairly easy to show that the “Perception Distance” equals the “Acceleration Distance,” and that each is half of the distance traveled by the oncoming car in the worst possible scenario.

I’m not going to go through the math rigorously, but here is a quick validation of the statement above. Under constant acceleration, the Tesla’s average speed will be half of the highway speed, in this case 30 MPH. Because the approaching car is traveling at twice the Tesla’s average speed, it will cover twice the distance in the same amount of time, so the “Acceleration Distance” is half of the approaching car’s travel distance. For the cars to just meet at B, the “Perception Distance” plus the “Acceleration Distance” must equal the approaching car’s travel distance. The “Perception Distance” is therefore the other half, which makes the “Acceleration Distance” and the “Perception Distance” the same. (Note that this is independent of the highway speed, but it does assume a constant speed for the approaching car and constant acceleration for the merging car.)
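The same argument can be written out with symbols. Here v is the highway speed, t_a is the time the Tesla takes to reach it, and a = v/t_a is the constant acceleration; these symbol names are introduced only for illustration. The approaching car covers d_car while the Tesla covers d_acc, and the gap the Tesla must be able to see is the difference:

$$
\begin{aligned}
d_{\text{acc}} &= \tfrac{1}{2} a t_a^2 = \tfrac{1}{2} v t_a \\
d_{\text{car}} &= v t_a \\
d_{\text{perc}} &= d_{\text{car}} - d_{\text{acc}} = v t_a - \tfrac{1}{2} v t_a = \tfrac{1}{2} v t_a = d_{\text{acc}}
\end{aligned}
$$

Because v appears as a common factor, the ratio d_perc / d_acc is 1 for any highway speed, which is the independence noted above.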

What is the Required Perception Distance?

The required “Perception Distance” is now easy to calculate from the highway speed: it is half the distance the approaching car travels in the time it takes the Tesla to accelerate to highway speed.
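As a sanity check on the “half and half” result, here is a minimal sketch that evaluates both cars’ positions on a fine time grid and finds the smallest starting gap for which the approaching car never catches the Tesla before Point B. It assumes the same idealized model as the text (constant highway speed, uniform acceleration from a stop, no safety spacing); the function name `min_gap_ft` and the grid size are illustrative choices, not anything from Chuck Cook’s video or Tesla.

```python
# Numerical check of the "half and half" result under the post's assumptions:
# the approaching car holds a constant highway speed, the Tesla accelerates
# uniformly from a stop, and no safety spacing is added.

MPH_TO_FPS = 5280 / 3600  # 1 mph expressed in feet per second


def min_gap_ft(speed_mph: float, zero_to_speed_s: float,
               steps: int = 100_000) -> tuple[float, float]:
    """Return (smallest safe starting gap, Tesla acceleration distance) in feet.

    The approaching car starts `gap` feet behind the merge point and the Tesla
    starts at the merge point. The gap is safe if the approaching car never
    reaches the Tesla before the Tesla hits highway speed (Point B).
    """
    v = speed_mph * MPH_TO_FPS   # highway speed, ft/s
    a = v / zero_to_speed_s      # constant acceleration, ft/s^2
    worst = 0.0
    for i in range(1, steps + 1):
        t = zero_to_speed_s * i / steps
        tesla = 0.5 * a * t * t  # Tesla's distance past the merge point
        approaching = v * t      # distance the approaching car has covered
        # The starting gap must absorb however much ground the approaching
        # car has gained on the Tesla at this instant.
        worst = max(worst, approaching - tesla)
    accel_distance = 0.5 * a * zero_to_speed_s ** 2
    return worst, accel_distance


for speed in (45, 60, 70):
    gap, accel = min_gap_ft(speed, 10)
    print(f"{speed} MPH: gap {gap:.1f} ft, acceleration distance {accel:.1f} ft")
```

For each speed the two numbers should agree, and at 60 MPH with a 10 second 0–60 both come out at about 440 ft. The brute-force grid is deliberately naive so that it checks the closed-form argument rather than restating it.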

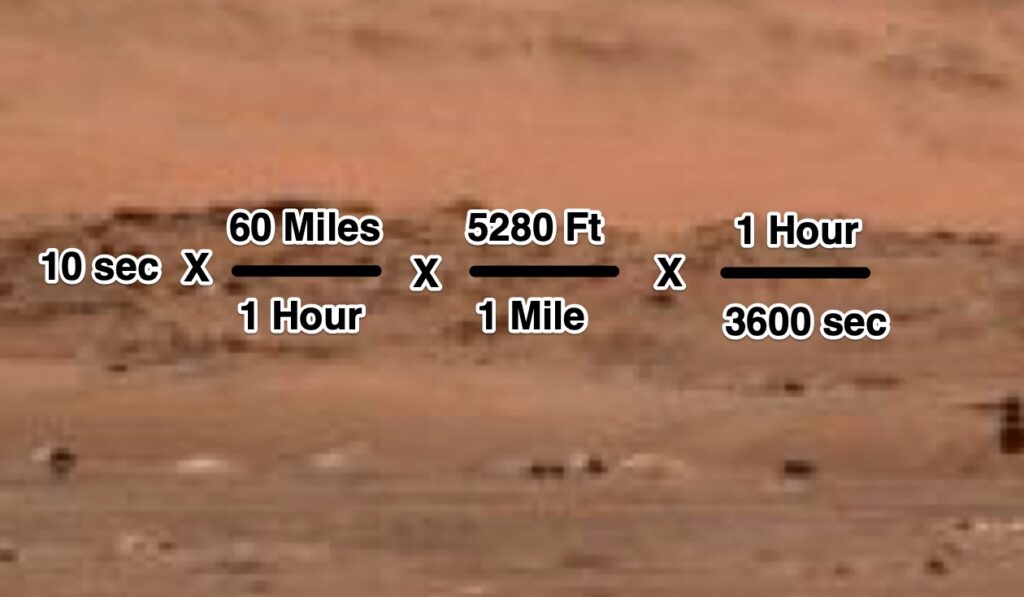

I know that Teslas can accelerate very quickly, but let’s first start with a more normal acceleration profile, the kind you would expect in everyday (and especially FSD) driving. I’ll use 0 to 60 MPH (~100 kph) in 10 seconds. In those 10 seconds, a car traveling at 60 MPH will cover 880′ (~268m).

So the perception distance would need to be half of 880′, which is 440′ (~134m). Remember that in this analysis, at this perception distance and speed, the cars would meet at Point B, which in reality you would not want to happen. Still, you could treat this as a worst-case scenario in which the approaching car was right at the edge of what the Tesla could see, and you would be okay with requiring the approaching car to slow a bit. That sounds fine to me. But we are in a Tesla, so let’s push the acceleration a bit more.

At 0–60 MPH in 6 seconds, the required perception distance drops to 264′ (~80m), so let’s continue with that number.
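The arithmetic for both acceleration profiles fits in a few lines. This is a minimal sketch of the rule stated above (perception distance is half of what the approaching car covers while the Tesla gets up to speed); `perception_distance` is a hypothetical helper name, and the conversion constants are the exact mile/foot/metre definitions.

```python
MPH_TO_FPS = 5280 / 3600  # 1 mph in feet per second
FT_TO_M = 0.3048          # exact definition of the international foot


def perception_distance(speed_mph: float, zero_to_speed_s: float) -> tuple[float, float]:
    """Required perception distance as (feet, metres) under the post's model:
    half the distance the approaching car covers while the Tesla accelerates."""
    approaching_ft = speed_mph * MPH_TO_FPS * zero_to_speed_s
    need_ft = approaching_ft / 2
    return need_ft, need_ft * FT_TO_M


for t in (10, 6):
    ft, m = perception_distance(60, t)
    print(f"0-60 in {t} s: need {ft:.0f} ft (~{m:.0f} m)")
# 0-60 in 10 s: need 440 ft (~134 m)
# 0-60 in 6 s: need 264 ft (~80 m)
```

Because the result scales linearly with both speed and acceleration time, shaving 4 seconds off the 0–60 time cuts the requirement by 40%.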

How far can the side cameras see?

Tesla’s website lists the maximum range of the side cameras as 80m. Hmm, that is convenient! Above, we showed that we need at least 80m of perception distance to make this merge, so (under ideal circumstances) the cameras might just be good enough.

But that is the range of the camera. Is the FSD system actually able to “resolve” a car at that range in the real world? By “resolve,” I mean that the camera has picked up an image and the FSD computer has recognized it as an approaching car. (Note that here we are NOT asking the FSD computer to estimate the speed of that approaching car, which I assume would take a few more frames and more computation.)

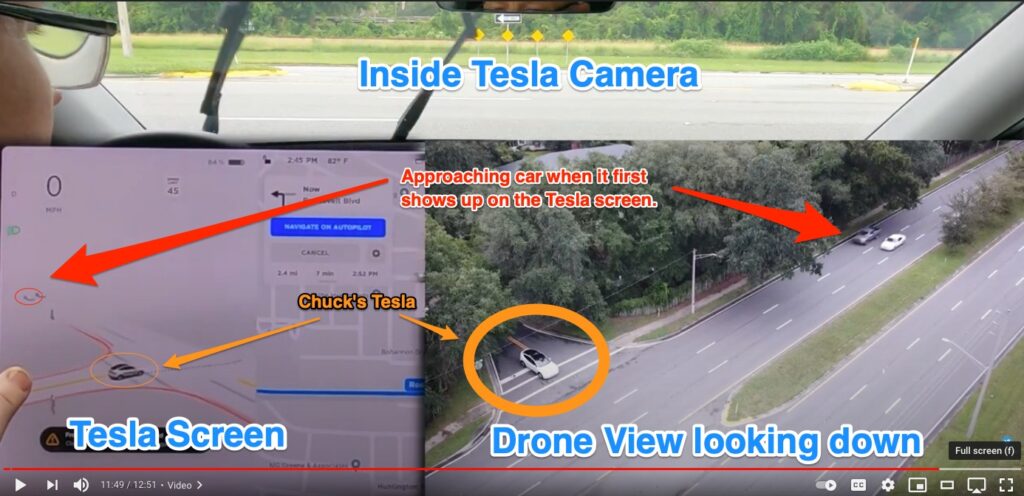

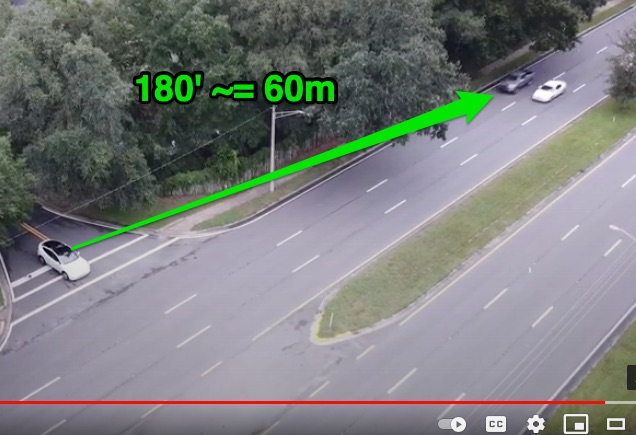

To answer this question, I looked back at Chuck Cook’s YouTube video. At 11 minutes 48 seconds into the video, you see the following image, where the car in the inside lane first shows up on the UI display. It is possible that the computer “sees” it sooner, but the display also shows many detected objects that are not actually there, so I will assume that once the car is shown as a steady image, the computer has determined that there is most likely a car there.

Below is an annotated screenshot from Chuck’s video.

There is a ton of information in this screenshot, and it may be easier to understand if you watch the actual video. He has three synchronized camera views in this composite. The top of the screen is from a camera inside the Tesla looking forward. The lower left shows the Tesla screen, the “Mind of the FSD computer.” The lower right shows footage from a drone.

From this image, I estimated how far away the grey truck approaching in the inside lane was when the Tesla first displayed it, assuming that the two cameras are reasonably well synchronized. The standard for dashed lane markers is 10′ dashes with 30′ gaps, which gives a rough scale for measuring distance, although local markings can differ (source).
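Using the lane markers as a ruler comes down to counting dash-plus-gap cycles, each 40′ under the cited standard. The sketch below shows that conversion; the cycle count passed in is a hypothetical input chosen for illustration, not a number read from Chuck’s video, and `distance_from_dash_cycles` is an illustrative name.

```python
# Rough distance estimate from counting dashed lane-marker cycles.
# Assumes the 10 ft dash / 30 ft gap standard cited in the post; roads with
# different markings would need different constants.

DASH_FT = 10.0
GAP_FT = 30.0
FT_TO_M = 0.3048  # exact definition of the international foot


def distance_from_dash_cycles(cycles: float) -> tuple[float, float]:
    """Convert a count of dash+gap cycles into (feet, metres)."""
    feet = cycles * (DASH_FT + GAP_FT)
    return feet, feet * FT_TO_M


# Hypothetical count, for illustration only (not measured from the video).
feet, metres = distance_from_dash_cycles(5)
print(f"{feet:.0f} ft ≈ {metres:.0f} m")  # 200 ft ≈ 61 m
```

Each miscounted cycle shifts the estimate by about 12m, so an estimate made this way is only good to roughly that resolution.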

My estimate of the actual perception distance in this case is around 60m. Above, we showed that for traffic at 60 MPH and acceleration of 0–60 MPH in 6 seconds, we actually needed the full 80m of perception to be able to ensure we could enter traffic without interfering with the oncoming cars.
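To put a number on the gap, this sketch compares the requirement with the 80m spec and the ~60m estimate, and also inverts the formula to find the slowest 0–60 time that would still fit within 60m. It uses the same idealized model as above; the helper names are hypothetical, and the 60m figure is the rough estimate from this post, not a measured specification.

```python
MPH_TO_MPS = 0.44704  # exact: 1 mph = 0.44704 m/s


def required_perception_m(speed_mph: float, zero_to_speed_s: float) -> float:
    """Half the distance the approaching car covers while the Tesla accelerates."""
    return speed_mph * MPH_TO_MPS * zero_to_speed_s / 2


def max_zero_to_speed_s(speed_mph: float, perception_m: float) -> float:
    """Slowest 0-to-highway-speed time that still fits in the perception distance."""
    return 2 * perception_m / (speed_mph * MPH_TO_MPS)


spec_m = 80.0      # side-camera range listed on Tesla's website (per the post)
observed_m = 60.0  # rough estimate from Chuck Cook's video (per the post)

need = required_perception_m(60, 6)
print(f"needed: {need:.1f} m")                              # needed: 80.5 m
print(f"short of spec by: {need - spec_m:.1f} m")           # short of spec by: 0.5 m
print(f"short of observed by: {need - observed_m:.1f} m")   # short of observed by: 20.5 m
print(f"0-60 time that fits 60 m: {max_zero_to_speed_s(60, observed_m):.1f} s")
# 0-60 time that fits 60 m: 4.5 s
```

Under these assumptions, a ~60m detection range would require roughly a 4.5 second 0–60 just to meet the approaching car at Point B, with no margin left over.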

So from this quick and dirty analysis, it seems that the Tesla system of sideview cameras and processing might not be quite as good as one would want for this scenario. This has me a bit worried.

What do you think?

Be sure to check out Chuck’s YouTube Channel and subscribe for the best FSD beta content available.

Below is the YouTube video that inspired this post.